The Long Island Power Authority has observed that each step of classic asset management methodologies seems to lead to a need for more and better data management, data mining, performance analysis, work prioritization or data process automation tools. This need, combined with a growing number of data streams scattered across various systems and rising demands for real-time analysis and more thorough forecasting, makes a next step inevitable.

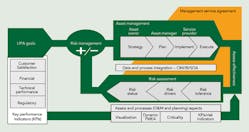

That next step will combine, orchestrate and automate the various classic tools along with significant real-time data streams to provide powerful operational recommendations while helping the utility manage risk, improve forecasts and optimize decisions. The Long Island Power Authority (LIPA) calls this overarching new process dynamic asset risk management (DARM).

The Viewpoints

Ultimately, DARM will prove to be a helpful decision-enhancing tool at all levels of the organization by providing timely and appropriate information to operators, asset managers, reliability engineers, financial decision makers and executives. Utility data is inherently abundant and complex; therefore, it must be selectively considered from different viewpoints and levels of detail, depending on user needs.

LIPA has chosen the following general viewpoints for risks and goals in its transmission and distribution business:

- Technical performance

- Financial performance

- Regulatory compliance

- Customer satisfaction.

Putting Risk into Play

An example of how LIPA uses risk assessment can be found in its capital investment program, where risk is measured in six to eight risk categories under each viewpoint. Through that process, a reliability improvement or an end-of-life asset replacement project (technical performance) can compete for funding with a billing system improvement (customer satisfaction) or a project with a quick payback (financial performance). The viewpoints and the more detailed categories an implementing utility uses can, of course, be utility specific.
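One plausible way to let projects from different viewpoints compete for the same funds is to reduce each project's category scores to a single weighted risk-reduction value per dollar. The sketch below assumes hypothetical viewpoint weights, 0 to 5 category scores, and illustrative project names and costs; the article does not publish LIPA's actual categories, weights or scoring rules.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical viewpoint weights; LIPA's actual weights are not given in the article.
VIEWPOINT_WEIGHTS = {
    "technical": 0.35,
    "financial": 0.25,
    "regulatory": 0.20,
    "customer": 0.20,
}

@dataclass
class Project:
    name: str
    cost_k: float  # capital cost in $ thousands (illustrative)
    # Risk reduction by viewpoint and category, each scored 0 (none) to 5 (high).
    risk_reduction: Dict[str, Dict[str, int]]

def project_score(p: Project) -> float:
    """Weighted risk reduction summed over viewpoints, per $1,000 of cost."""
    weighted = sum(
        VIEWPOINT_WEIGHTS[viewpoint] * sum(categories.values())
        for viewpoint, categories in p.risk_reduction.items()
    )
    return weighted / p.cost_k

def rank_projects(projects: List[Project]) -> List[Project]:
    return sorted(projects, key=project_score, reverse=True)

projects = [
    Project("Replace end-of-life breakers", 500.0,
            {"technical": {"reliability": 4, "safety": 3}}),
    Project("Billing system upgrade", 300.0,
            {"customer": {"billing_accuracy": 4, "complaints": 3}}),
    Project("Quick-payback efficiency project", 100.0,
            {"financial": {"payback": 5}}),
]

for p in rank_projects(projects):
    print(f"{p.name}: {project_score(p):.4f}")
```

Dividing by cost is one design choice among several; a utility could instead rank on raw risk reduction and apply the budget as a separate constraint.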

There are several illustrative examples of where DARM might be applied to a utility’s normal operating business risks:

- Vegetation management on a line is at normal cycle end

- A substation battery is near end of life

- A distribution breaker does not always reclose properly

- There is a highly loaded circuit

- Protection relays have exhibited problems and are being phased out

- A thunderstorm is on the way.

These items are examples of real business issues that concern programs such as storm hardening, maintenance, planning, capital spending and customer satisfaction, as well as the timing of those programs. While each of these risks can exist at any time for a particular asset, what if three, four or five of them exist in the same place with the same set of assets? Although a real-time coincidence of these risks would be a concern, it is perhaps more important to forecast these coincidences so the risk can be avoided altogether. Utilities often miss the opportunity to get ahead of these types of cascading or escalating risk problems.
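At its simplest, finding places where several of these risks coincide is a grouping problem. The minimal sketch below assumes each risk is already tagged with a hypothetical circuit identifier and flags any circuit carrying at least three distinct risks, first for current conditions and then with a forecast storm added.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical risk flags, each tagged with the circuit whose assets it affects.
risk_flags: List[Tuple[str, str]] = [
    ("CKT-101", "vegetation_cycle_end"),
    ("CKT-101", "battery_near_end_of_life"),
    ("CKT-101", "breaker_reclose_issue"),
    ("CKT-101", "high_loading"),
    ("CKT-205", "relay_phase_out"),
    ("CKT-205", "high_loading"),
    ("CKT-317", "vegetation_cycle_end"),
]

def coincident_risks(flags, threshold: int = 3) -> Dict[str, List[str]]:
    """Circuits carrying at least `threshold` distinct risks, with those risks listed."""
    by_circuit = defaultdict(set)
    for circuit, risk in flags:
        by_circuit[circuit].add(risk)
    return {c: sorted(r) for c, r in by_circuit.items() if len(r) >= threshold}

# Forecast view: an approaching thunderstorm adds an exposure on circuits in its path.
storm_path = ["CKT-205", "CKT-317"]
forecast = risk_flags + [(c, "thunderstorm_forecast") for c in storm_path]

print(sorted(coincident_risks(risk_flags)))  # ['CKT-101']
print(sorted(coincident_risks(forecast)))    # ['CKT-101', 'CKT-205']
```

In the forecast view, CKT-205 crosses the threshold only because of the storm, which is the kind of coincidence worth catching before it happens.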

What Is DARM?

DARM is an overarching asset and risk management system that will provide real-time operational information, management decision-making analysis and forward forecasting for decision support. DARM will provide the ability to look across all aspects of the operation of assets to fully assess risks and provide enough coordination to mitigate them at the lowest cost or with the best solution.

DARM will be a real-time user of data streams. It will be both an administrative overlay and a merger of business processes, tools and methodologies. It will be both visualization technology and a forecast tool. LIPA has not yet put all the components that make up DARM into place. However, each building block put in place and each technical improvement will be a stepping stone toward the larger vision.

Data Integration and Information Management

LIPA’s first step toward DARM was based on a one-to-many integration solution in which enterprise data sources were mapped to and integrated with the single data model of the Electric Power Research Institute’s (EPRI’s) Maintenance Management Workstation (MMW).

MMW provided LIPA with tools for work prioritization back in the early 2000s. Subsequent pilot projects focused on testing the use of the common information model, which was emerging as the standard for the industry’s data modeling. Implementation included development and performance evaluation of infrastructure using a utility integration bus. These early projects demonstrated the viability of data integration and process automation but were often cumbersome and custom built.
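A minimal sketch of the one-to-many idea, assuming two hypothetical source systems with their own field names: each source needs only one adapter into a simplified common asset record (not actual MMW or CIM classes), rather than an interface to every other system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Asset:
    """Simplified common-model asset record (illustrative, not MMW or CIM classes)."""
    asset_id: str
    asset_type: str
    install_year: int
    location: str

# One adapter per source system maps its native fields into the common model.
def from_work_mgmt(rec: dict) -> Asset:
    return Asset(
        asset_id=rec["EQUIP_NO"],
        asset_type=rec["EQUIP_CLASS"].lower(),
        install_year=int(rec["INSTALLED"][:4]),  # "YYYY-MM-DD" date string
        location=rec["SUBSTATION"],
    )

def from_gis(rec: dict) -> Asset:
    return Asset(rec["id"], rec["type"], rec["year"], rec["site"])

ADAPTERS = {"work_mgmt": from_work_mgmt, "gis": from_gis}

def to_common(source: str, rec: dict) -> Asset:
    return ADAPTERS[source](rec)

def interface_count(n_systems: int, hub: bool) -> int:
    """Mappings to build: one per system with a common model, up to n*(n-1) point to point."""
    return n_systems if hub else n_systems * (n_systems - 1)

a = to_common("work_mgmt", {"EQUIP_NO": "BRK-7", "EQUIP_CLASS": "BREAKER",
                            "INSTALLED": "1987-06-01", "SUBSTATION": "Sub A"})
b = to_common("gis", {"id": "BRK-7", "type": "breaker", "year": 1987, "site": "Sub A"})
print(a == b)                          # True
print(interface_count(10, hub=False))  # 90
print(interface_count(10, hub=True))   # 10
```

The point-to-point figure is a worst case in which every system exchanges data directly with every other one in both directions.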

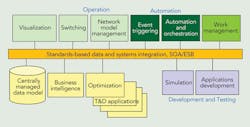

Today, LIPA’s enterprise information management solution uses the common information model as a base for data standardization. LIPA’s technical information technology (IT) architecture uses an off-the-shelf enterprise service bus (ESB) as part of its service-oriented architecture to manage data integration and data exchange between critical systems. The ESB also provides a virtual database for business intelligence data mining and analysis. It is designed to support process automation and will enable future orchestration of applications in combination with complex event triggering.
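The publish/subscribe pattern an ESB provides can be illustrated with a toy in-process bus. This is not the API of any commercial ESB; the topic name, message fields and the 0.4 health-index threshold are hypothetical. Publishers and subscribers know only the topic, which is what lets a new consumer, such as a business intelligence store, be added without changing the source system.

```python
from collections import defaultdict
from typing import Callable, Dict, List

class MiniBus:
    """Toy in-process publish/subscribe bus standing in for an ESB (not a real ESB API)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver the message to every subscriber of the topic; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)

bus = MiniBus()
work_orders: List[str] = []
bi_store: List[dict] = []

def work_management(msg: dict) -> None:
    # Hypothetical rule: open a work order when the health index drops below 0.4.
    if msg["health"] < 0.4:
        work_orders.append(msg["asset_id"])

bus.subscribe("asset.condition", work_management)
bus.subscribe("asset.condition", bi_store.append)  # BI store keeps every message

bus.publish("asset.condition", {"asset_id": "TX-12", "health": 0.3})
bus.publish("asset.condition", {"asset_id": "TX-15", "health": 0.9})
print(work_orders)    # ['TX-12']
print(len(bi_store))  # 2
```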

Central Data Model and Automation

One of the key elements of LIPA’s enterprise information management concept is a centrally managed data model. The key enabler of cost-effective data integration is an automated data model management tool, which keeps the data model current with evolving industry data standards while remaining practical, manageable and cost-effective to use across critical systems and a large number of interfaces.
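Part of what such a model management tool automates can be sketched as a version diff: compare two releases of the central model and list the interfaces that touch removed or retyped attributes. The class and attribute names below are hypothetical and only loosely inspired by CIM naming; real CIM releases are UML models, not Python dictionaries.

```python
from typing import Dict, List

Model = Dict[str, Dict[str, str]]  # class name -> {attribute name: type name}

def diff_model(old: Model, new: Model) -> Dict[str, List[str]]:
    """List attributes added, removed or retyped between two model versions."""
    changes: Dict[str, List[str]] = {"added": [], "removed": [], "retyped": []}
    for cls in sorted(old.keys() | new.keys()):
        old_attrs, new_attrs = old.get(cls, {}), new.get(cls, {})
        for attr in sorted(new_attrs.keys() - old_attrs.keys()):
            changes["added"].append(f"{cls}.{attr}")
        for attr in sorted(old_attrs.keys() - new_attrs.keys()):
            changes["removed"].append(f"{cls}.{attr}")
        for attr in sorted(old_attrs.keys() & new_attrs.keys()):
            if old_attrs[attr] != new_attrs[attr]:
                changes["retyped"].append(f"{cls}.{attr}")
    return changes

def affected_interfaces(changes: Dict[str, List[str]],
                        interfaces: Dict[str, List[str]]) -> List[str]:
    """Interfaces using a removed or retyped attribute need rework; additions are non-breaking."""
    breaking = set(changes["removed"]) | set(changes["retyped"])
    return sorted(name for name, used in interfaces.items() if breaking & set(used))

# Hypothetical model versions and interface usage.
v1 = {
    "Breaker": {"rated_current": "float", "normal_open": "bool"},
    "Transformer": {"vector_group": "str", "nameplate_note": "str"},
}
v2 = {
    "Breaker": {"rated_current": "float", "normal_open": "bool", "breaking_capacity": "float"},
    "Transformer": {"vector_group": "int"},
}
interfaces = {
    "scada_feed": ["Breaker.normal_open"],
    "gis_sync": ["Transformer.vector_group", "Breaker.rated_current"],
    "asset_registry": ["Transformer.nameplate_note"],
}

changes = diff_model(v1, v2)
print(changes)
print(affected_interfaces(changes, interfaces))  # ['asset_registry', 'gis_sync']
```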

LIPA plans to complete the transition from nonconforming to standards-based data and systems integration over the life cycle of its critical systems. All new systems, and all integration interfaces to legacy systems, will be based on current industry standards.

It Is Here

LIPA has worked extensively within the industry to develop the thinking around these concepts. Most of the early adopters of reliability-centered maintenance in the 1990s discovered that maintenance optimization and maintenance work prioritization are just the tip of the iceberg of opportunities to improve asset and system performance.

The first EPRI conference on T&D asset management was held in 2000 in New York, New York, U.S., and hosted by Con Edison. The event was so popular with utility managers and experts that participation had to be limited. At that time, LIPA was one of a handful of U.S. utilities working to define T&D asset management through a collaborative EPRI project. In the mid-2000s, LIPA took a further step, defining its asset management approach as risk-based asset management. LIPA then began to see the need for a more complex and predictive approach, which it defined as dynamic asset risk management.

LIPA has been making a step-by-step transition to standards-based IT infrastructure, which has proven to be a lower-cost and lower-risk approach. The process is centralized and automated to the maximum degree possible, ensuring consistency and efficiency. The practices LIPA uses for requests for proposals, vendor selection and product selection require compliance with industry standards and interoperability. Internally, the commitment is to build and maintain T&D IT infrastructure in compliance with industry standards. Integrations are also designed to take advantage of the near plug-and-play capability of standards-based systems and applications. Together, these transition strategies lower risk and cost and enable the use of best-of-breed products from various vendors.

Need for New Tools

Key elements of LIPA’s DARM concept include the use of probabilistic methodologies to assess and optimize the risks to achieving its goals. The dynamic part of DARM reflects the need to perform risk assessment and optimization continuously and in near real time.
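As a minimal illustration of assessing the probability of missing a goal, rather than checking a single deterministic case, the Monte Carlo sketch below estimates the chance that annual customer minutes interrupted exceed a target. The component failure probabilities, customer counts, exponential outage durations and independence between components are all assumptions for illustration.

```python
import random

# Hypothetical components on one circuit:
# (annual failure probability, customers interrupted, mean outage minutes)
COMPONENTS = [
    (0.10, 1200, 90),
    (0.05, 3000, 120),
    (0.20, 400, 60),
    (0.02, 8000, 180),
]

def simulate_year(rng: random.Random) -> float:
    """One sampled year of customer minutes interrupted (independent failures assumed)."""
    total = 0.0
    for p_fail, customers, mean_minutes in COMPONENTS:
        if rng.random() < p_fail:
            # Outage duration drawn from an exponential distribution (assumption).
            total += customers * rng.expovariate(1.0 / mean_minutes)
    return total

def prob_exceeding(goal: float, trials: int = 20_000, seed: int = 42) -> float:
    """Monte Carlo estimate of P(annual customer minutes > goal)."""
    rng = random.Random(seed)
    exceed = sum(simulate_year(rng) > goal for _ in range(trials))
    return exceed / trials

print(f"P(miss 200,000 customer-minute goal) = {prob_exceeding(200_000):.3f}")
```

Rerunning the estimate whenever a failure probability or forecast input changes is the "dynamic" part in miniature.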

More extensive use of the planned methodologies is sometimes limited by practical computational problems. These include the long computing time required to perform complex studies with large numbers of calculations using combinations of possible study parameter values. Today, even existing deterministic studies and tools require hours and, in some cases, days for relatively simple study and optimization tasks.
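The computing burden follows from simple arithmetic: the number of study cases is the product of the number of values each parameter can take. The parameter grid and the two-minutes-per-run figure below are assumptions chosen only to show the scale.

```python
from itertools import product
from math import prod

# Hypothetical study parameters and the values each can take.
parameters = {
    "load_level_pu": [0.8, 0.9, 1.0, 1.1],
    "ambient_temp_c": [20, 30, 35],
    "contingency": [f"N-1 case {i}" for i in range(50)],
    "dispatch_pattern": ["A", "B", "C", "D", "E"],
}

n_cases = prod(len(values) for values in parameters.values())
minutes_per_run = 2.0  # assumed time for one deterministic study run
serial_hours = n_cases * minutes_per_run / 60

print(n_cases)       # 3000
print(serial_hours)  # 100.0

# The explicit case list an automated study driver would dispatch,
# e.g., spread across cluster or cloud workers.
cases = list(product(*parameters.values()))
print(len(cases))    # 3000
```

Adding a single further parameter with ten possible values would multiply this to 30,000 runs.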

In those situations, LIPA expects that some combination of high-performance or cloud computing and more effective probabilistic calculation methodologies will need to be applied to focused aspects of the use cases of interest. LIPA, again, is taking a step-by-step approach to developing and implementing new technology. This includes the use of more powerful computers and cloud computing, along with existing deterministic tools and improved methodologies for probabilistic calculations and analysis. The approach also anticipates the need to develop new, scalable tools and methodologies that improve efficiency and reduce the computing time required for complex, multi-parameter optimization use cases.

LIPA has started working with Brookhaven National Laboratory (BNL) to leverage the availability of its supercomputer and cloud computing, and to coordinate future efforts with BNL’s probabilistic risk assessment team. The resulting capability eventually will run existing deterministic and probabilistic tools faster and more frequently, closer to real time and in probabilistic mode.

A jointly developed road map includes evaluating options to reduce the number of iterations of some models while still obtaining results that have the required accuracy. Further improvement is expected by developing methodologies and optimization algorithms to perform focused studies for limited and specific operating areas and conditions. Longer-term planned activities include the customization of tools used in other industries and the development of new and specialized tools.
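One standard way to reduce iterations while holding a required accuracy is to size, or stop, a Monte Carlo study from its confidence interval. The sketch assumes a normal-approximation (Wald) interval on an estimated probability; it is a generic technique, not the specific method in the LIPA and BNL road map, and the trial function is hypothetical.

```python
import math
import random
from typing import Callable, Tuple

def samples_needed(p_estimate: float, half_width: float, z: float = 1.96) -> int:
    """Runs needed so a ~95% normal-approximation CI on a probability has this half-width."""
    return math.ceil(z ** 2 * p_estimate * (1.0 - p_estimate) / half_width ** 2)

def run_until_accurate(trial: Callable[[random.Random], bool], half_width: float,
                       z: float = 1.96, batch: int = 1000,
                       max_runs: int = 1_000_000, seed: int = 1) -> Tuple[float, int]:
    """Run Bernoulli trials in batches; stop once the CI half-width meets the target."""
    rng = random.Random(seed)
    hits, runs, p = 0, 0, 0.0
    while runs < max_runs:
        hits += sum(trial(rng) for _ in range(batch))
        runs += batch
        p = hits / runs
        # Require at least one hit so a zero estimate is not mistaken for precision.
        if hits > 0 and z * math.sqrt(p * (1.0 - p) / runs) <= half_width:
            break
    return p, runs

print(samples_needed(0.5, 0.01))    # worst case (p = 0.5)
print(samples_needed(0.02, 0.005))  # 3012

# Hypothetical study whose "failure" outcome occurs about 10% of the time.
p_hat, n_runs = run_until_accurate(lambda rng: rng.random() < 0.10, half_width=0.01)
print(p_hat, n_runs)
```

Stopping adaptively means easy cases finish early while only the uncertain ones consume the full computing budget.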

Next Steps

LIPA’s current infrastructure includes the ESB and the supporting components needed for extensive process automation. Future upgrades will include the ongoing implementation of service-oriented architecture and complex event processing to enable further process automation and to support specific, prioritized applications of DARM.
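Complex event processing can be pictured as rules evaluated over a stream of events. The minimal sketch below, assuming time-ordered events and hypothetical event kinds, fires when a storm warning, a reclose failure and a high-load alarm all hit the same circuit within a 60-minute window.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Set

@dataclass
class Event:
    time_min: float  # minutes since an arbitrary start time
    circuit: str
    kind: str

class CoincidenceRule:
    """Fire when all `required` event kinds occur on one circuit within `window_min`.

    Assumes events arrive in time order.
    """

    def __init__(self, required: Set[str], window_min: float) -> None:
        self.required = required
        self.window_min = window_min
        self.recent: Dict[str, Deque[Event]] = {}

    def on_event(self, ev: Event) -> bool:
        q = self.recent.setdefault(ev.circuit, deque())
        q.append(ev)
        # Drop events that have aged out of the sliding window.
        while q and ev.time_min - q[0].time_min > self.window_min:
            q.popleft()
        return self.required <= {e.kind for e in q}

rule = CoincidenceRule({"storm_warning", "reclose_failure", "high_load"}, window_min=60)
stream = [
    Event(0, "CKT-101", "storm_warning"),
    Event(10, "CKT-205", "storm_warning"),
    Event(30, "CKT-101", "reclose_failure"),
    Event(50, "CKT-101", "high_load"),
    Event(100, "CKT-205", "reclose_failure"),
    Event(105, "CKT-205", "high_load"),
]
fired = [(e.time_min, e.circuit) for e in stream if rule.on_event(e)]
print(fired)  # [(50, 'CKT-101')]
```

CKT-205 sees the same three event kinds, but its storm warning has aged out of the window by the time the other two arrive, so the rule does not fire there.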

Some of the planned use cases will require automation and extensive computing power to perform many study runs. These runs will use a wide range of parameter values and combinations to identify, for example, an optimum schedule for planned transmission line maintenance that optimizes the cost of regular and overtime labor, accounts for fluctuating energy prices and potential loss of revenue, and takes into account the risk and impact on system reliability and customer satisfaction performance goals.
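At its simplest, the maintenance scheduling trade-off is an expected-cost comparison across candidate outage days. Every rate, price, probability and consequence cost below is an assumed placeholder, lost revenue could be added as a further term, and exhaustive search over a few options stands in for the optimization algorithms a real study would need over many lines and days.

```python
from dataclasses import dataclass

# All cost inputs are assumed placeholders.
REGULAR_RATE = 95.0          # $ per crew-hour
OVERTIME_RATE = 142.5        # $ per crew-hour
CREW_HOURS = 40.0            # total crew-hours for the job
REPLACEMENT_MWH = 300.0      # energy that must be replaced while the line is out
INTERRUPTION_COST = 2.0e6    # $ consequence if a second contingency causes outages

@dataclass
class DayOption:
    day: str
    overtime_hours: float
    energy_price: float      # forecast $/MWh for replacement energy
    contingency_prob: float  # probability of a second contingency during the outage

def total_cost(opt: DayOption) -> float:
    """Expected cost = labor + replacement energy + probability-weighted interruption cost."""
    regular_hours = CREW_HOURS - opt.overtime_hours
    labor = regular_hours * REGULAR_RATE + opt.overtime_hours * OVERTIME_RATE
    energy = REPLACEMENT_MWH * opt.energy_price
    risk = opt.contingency_prob * INTERRUPTION_COST
    return labor + energy + risk

options = [
    DayOption("Mon", overtime_hours=0, energy_price=45.0, contingency_prob=0.010),
    DayOption("Wed", overtime_hours=8, energy_price=60.0, contingency_prob=0.002),
    DayOption("Sat", overtime_hours=40, energy_price=30.0, contingency_prob=0.004),
]

for opt in options:
    print(opt.day, round(total_cost(opt)))
best = min(options, key=total_cost)
print("Lowest expected cost:", best.day)  # Sat
```

With these placeholder numbers, the all-overtime Saturday wins because lower energy prices and lower contingency risk outweigh the labor premium.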

Using an approach similar to the current risk assessments of capital projects, LIPA is planning to develop risk models of individual circuits and system components. These risk models will take into account asset condition and the predicted probabilistic performance of individual circuits and their components. The models will include, for example, status and maintenance history, performance of protection and communications systems, anticipated operating conditions, and asset design and performance data. This probabilistic risk assessment will be updated dynamically as changes in operating conditions and asset performance are forecast.
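A common simplification for a circuit risk model treats the circuit as a series system: it is out if any critical component fails. The sketch assumes independent failures, constant hazard rates and a hypothetical condition-score multiplier; protection and communications performance, which the models above are meant to include, would need additional terms.

```python
import math

# Hypothetical hazard-rate multipliers by condition score (1 = good, 5 = poor).
CONDITION_MULTIPLIER = {1: 0.5, 2: 0.8, 3: 1.0, 4: 1.8, 5: 3.0}

def adjusted_failure_prob(base_rate_per_year: float, condition: int) -> float:
    """Annual failure probability from a constant hazard rate scaled by condition."""
    rate = base_rate_per_year * CONDITION_MULTIPLIER[condition]
    return 1.0 - math.exp(-rate)

def circuit_outage_prob(component_probs) -> float:
    """Series system, independent failures: the circuit is out if any component fails."""
    return 1.0 - math.prod(1.0 - p for p in component_probs)

# (component, base failures per year, condition score): illustrative values only.
components = [
    ("feeder breaker", 0.02, 4),
    ("line recloser", 0.05, 2),
    ("cable section", 0.10, 5),
    ("substation battery", 0.03, 3),
]

probs = [adjusted_failure_prob(rate, cond) for _, rate, cond in components]
print(f"Circuit outage probability: {circuit_outage_prob(probs):.3f}")

# Dynamic update: the cable section is refurbished and re-scored from 5 to 3.
components[2] = ("cable section", 0.10, 3)
updated = [adjusted_failure_prob(rate, cond) for _, rate, cond in components]
print(f"After refurbishment: {circuit_outage_prob(updated):.3f}")
```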

Other use cases will require real-time monitoring of operating parameters and will need to anticipate operating conditions and load-delivery requirements a day ahead (or even hours or minutes ahead). One such use case would combine forecast weather, load, expected reliability and the risk of failure of any number of critical assets to forecast and optimize must-run generation in a load pocket. This use case illustrates a risk optimization scenario with a potentially significant impact on system reliability, cost of system operation and customer satisfaction, and it will require adding complex event triggering and orchestration of multiple applications to LIPA’s infrastructure.
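The must-run decision can be sketched as finding the smallest committed generation that keeps the probability of a load-pocket shortfall under a target. The tie-line ratings, outage probabilities, normally distributed load forecast and 2 percent target are assumptions for illustration only.

```python
import random
from typing import Optional

# Assumed load-pocket import paths (MW) and their day-ahead forced-outage probabilities.
TIE_LINES_MW = [400, 400, 300]
LINE_OUTAGE_PROB = [0.02, 0.02, 0.05]

def shortfall_prob(must_run_mw: float, load_mean: float, load_sd: float,
                   trials: int = 20_000, seed: int = 7) -> float:
    """Monte Carlo estimate of P(pocket load > available imports + must-run generation)."""
    rng = random.Random(seed)
    short = 0
    for _ in range(trials):
        load = rng.gauss(load_mean, load_sd)  # normally distributed forecast error (assumed)
        imports = sum(cap for cap, q in zip(TIE_LINES_MW, LINE_OUTAGE_PROB)
                      if rng.random() >= q)   # line available with probability 1 - q
        if load > imports + must_run_mw:
            short += 1
    return short / trials

def min_must_run(load_mean: float, load_sd: float, target: float = 0.02,
                 step: int = 50, max_mw: int = 1000) -> Optional[int]:
    """Smallest must-run level, in `step` MW increments, meeting the shortfall target."""
    for mw in range(0, max_mw + step, step):
        if shortfall_prob(mw, load_mean, load_sd) <= target:
            return mw
    return None  # target not reachable within max_mw

print(min_must_run(load_mean=1100, load_sd=60))
```

Reusing the same seed for every candidate level (common random numbers) makes the estimated shortfall probability non-increasing as must-run MW grows, so the simple upward search is well behaved.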

Probabilistic approaches use combinations of statistically possible values and provide a better understanding of the range of possible outcomes, with related probabilities and confidence levels. By comparison, current deterministic processes and criteria are clumsy and obsolete, and better probability-based risk models are clearly needed. Such models will provide a better understanding of the risk associated with possible combinations of critical parameters, as well as the overall risk of complex systems that have many independent and interrelated risk parameters.

To maximize benefits, risk optimization needs to be done simultaneously for key performance areas. For example, in the must-run generation use case described earlier, the probabilistic reliability of specific circuits and their individual components needs to be combined with probabilistic energy pricing for specific, probabilistically modeled operating conditions and should include a probabilistic assessment of the impact on specific customers.

Conceptually, a multi-parameter optimization approach is applicable for both short-term operational decisions and longer-term capital investment decisions. Analysis of various possible combinations of individual parameters used in probabilistic studies is more likely to uncover high-risk operating scenarios that may not be identified with a deterministic approach.
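The point that scanning combinations can expose risk a single deterministic case misses can be shown with a small enumeration. The discrete distributions, ratings and derating factors below are hypothetical, and the parameters are treated as independent; the nominal case passes comfortably while the probability-weighted scan finds a meaningful chance of overload.

```python
from itertools import product

# Hypothetical discrete distributions: value -> probability.
LOAD_MW = {900: 0.3, 1000: 0.5, 1150: 0.2}
AMBIENT_C = {25: 0.6, 35: 0.4}
LINE_STATUS = {"all_in": 0.95, "one_out": 0.05}

def loading_pct(load_mw: float, ambient_c: float, status: str) -> float:
    """Corridor loading as a percent of its rating (illustrative ratings)."""
    rating = 1200.0 if ambient_c <= 30 else 1080.0  # hot weather derates the corridor
    if status == "one_out":
        rating *= 0.6                                # assumed remaining capability
    return 100.0 * load_mw / rating

# Deterministic check: a single nominal case.
nominal = loading_pct(1000, 25, "all_in")
print(f"Nominal case: {nominal:.1f}% loading")  # Nominal case: 83.3% loading

# Probabilistic scan: every combination, weighted by its probability
# (parameters treated as independent, an assumption).
violations = []
for (load, p_l), (amb, p_a), (st, p_s) in product(
        LOAD_MW.items(), AMBIENT_C.items(), LINE_STATUS.items()):
    pct = loading_pct(load, amb, st)
    if pct > 100.0:
        violations.append(((load, amb, st), round(pct, 1), p_l * p_a * p_s))

p_violation = sum(prob for _, _, prob in violations)
for case, pct, prob in violations:
    print(case, f"{pct}%", f"p={prob:.4f}")
print(f"Probability of overload: {p_violation:.3f}")
```

Here the only all-lines-in overload is the combination of peak load and hot weather, a case the nominal check never examines.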

Predrag Vujovic is the director of T&D planning at the Long Island Power Authority. Since joining LIPA in 2007, his responsibilities have included development and implementation of tools and methodologies for asset and risk management, risk-based capital projects selection and development of LIPA’s smart grid road map. In a prior position at EPRI, he managed research and development projects focused on integrated equipment monitoring and diagnostics, asset management and reliability-centered maintenance.

Mike Hervey leads Navigant Consulting’s service offerings related to T&D business strategy and performance improvement. Throughout his career, he has been involved with multiple process improvement and organizational development initiatives. Most recently, he was COO and acting CEO of LIPA. He was integrally involved in the transition of LIPA’s T&D business to a new service provider under a public-private partnership model. Previously, he spent 18 years at Commonwealth Edison, working in T&D operations.