Duke Energy's Hybrid Approach to AI

Artificial intelligence systems show promise in analyzing and evaluating power system data. There is currently a large push to use artificial intelligence (AI) and machine learning (ML) to help reduce the time it takes to perform maintenance on transformers and to predict where and when the next transformer will fail.

Major companies in various industries are promoting the wonders of AI and ML: managing replacement plans for an aging or aged fleet, reducing maintenance while extending asset life, and improving operational efficiency, all while capturing available expertise so it is not lost. These are lofty goals, and claims about the benefits of real-world AI applications are already being made. The problem is that AI is not perfect, but it still has a role in analyzing well-described problems where there is sufficient data to cover all the situations that may arise.

Now, consider two things that are true in the electric utility industry:

- Utilities are almost always faced with incomplete and possibly ambiguous data.

- Data analysis does not take place in a vacuum; utilities have a history and knowledge base to call on to check results.

In simple terms, if an AI system is developed to analyze power transformer data, then, based on the data available, it should be able to replicate what has already been established as common knowledge or industry expertise. For example, in dissolved gas analysis (DGA), the link between increasing acetylene levels and an increasing probability of failure should emerge as a rule.

If the AI is unable to state such a rule in clear terms, then utilities might not trust the other analyses it produces. Utilities must have a believable audit trail for an analysis to justify the actions taken.
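To make the idea of a clearly stated rule with an audit trail concrete, here is a minimal Python sketch of an interpretable acetylene check that records why it reached its verdict. The limits `ACETYLENE_CAUTION_PPM` and `ACETYLENE_ALARM_PPM`, and the function `acetylene_rule`, are hypothetical placeholders rather than values from any DGA standard; a real rule would take its limits from the guide the utility follows.

```python
from dataclasses import dataclass

# Hypothetical limits in ppm, for illustration only. Real limits would come
# from whichever DGA standard or guide the utility has adopted.
ACETYLENE_CAUTION_PPM = 1.0
ACETYLENE_ALARM_PPM = 5.0


@dataclass
class RuleResult:
    flag: str    # "normal", "caution" or "alarm"
    reason: str  # human-readable audit-trail entry explaining the flag


def acetylene_rule(samples_ppm: list[float]) -> RuleResult:
    """Flag a transformer from its chronological acetylene (C2H2) readings."""
    latest = samples_ppm[-1]
    rising = len(samples_ppm) >= 2 and latest > samples_ppm[-2]
    if latest >= ACETYLENE_ALARM_PPM:
        return RuleResult(
            "alarm",
            f"C2H2 at {latest} ppm is at or above the {ACETYLENE_ALARM_PPM} ppm alarm limit",
        )
    if latest >= ACETYLENE_CAUTION_PPM and rising:
        return RuleResult(
            "caution",
            f"C2H2 rose to {latest} ppm, at or above the {ACETYLENE_CAUTION_PPM} ppm caution limit",
        )
    return RuleResult("normal", f"C2H2 at {latest} ppm is below the limits or not rising")


print(acetylene_rule([0.0, 0.5, 2.0]))
```

Because every verdict carries its own `reason` string, an engineer can check the output against known DGA practice, which is the kind of audit trail the text calls for.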

Business Environment

In an ideal world, utilities would have complete and detailed information on every transformer: maintenance history, test data, monitoring data, fault data and so forth. Standards and analytic tools would tell us about each individual transformer: health, probability of failure and remaining life, for example.

So, what can AI and ML do for electric utilities? Following are some examples of benefits AI has delivered in other fields:

- In weather forecasting, AI is used to reduce human error.

- Banks use AI in identity verification processes.

- Numerous institutions use AI to support help-line requests, sometimes in the form of chatbots.

- Siri, Cortana and Google Assistant are based on AI.

- AI systems can classify well-organized data, such as X-rays.

On the downside, there are some issues with AI and ML:

- AI can be good at interpolation within a data set, but it might not be good at extrapolation to new data.

- “Giraffing,” the generic name for identifying objects where none exist, can introduce bias into analyses based on unrepresentative data sets.

- A black-box approach can leave the reasons for a decision unclear.
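The interpolation-versus-extrapolation issue can be shown with a deliberately simple model. In this sketch, the quadratic `true_relation` and the 1-nearest-neighbor predictor are hypothetical stand-ins chosen for clarity, not a transformer model: the predictor is accurate inside its training range and badly wrong outside it.

```python
def true_relation(x: float) -> float:
    """Hypothetical underlying behavior: the response grows with the square of x."""
    return x * x


# Training data only covers 0 <= x <= 1.
train_x = [i / 10 for i in range(11)]
train_y = [true_relation(x) for x in train_x]


def nearest_neighbor_predict(x: float) -> float:
    """Predict y by copying the closest training example (1-nearest neighbor)."""
    idx = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[idx]


for x in (0.52, 3.0):  # one point inside the training range, one far outside it
    pred, actual = nearest_neighbor_predict(x), true_relation(x)
    print(f"x={x}: predicted {pred:.2f}, actual {actual:.2f}")
```

Running it prints a prediction of 0.25 against an actual value of 0.27 at x = 0.52, but 1.00 against 9.00 at x = 3.0: the model cannot know about behavior it never saw during training.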

In fact, many of the benefits of AI applications depend on having clean, well-ordered data. In data mining, one estimate holds that 95% of the possible benefits can be achieved through data cleanup and standard statistical methods.
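The text does not say which standard statistical methods the estimate has in mind. As one common example, the sketch below flags suspect readings with the median/MAD-based "modified z-score" (the 0.6745 constant and the 3.5 cutoff are the usual conventions for that method). The readings and the function name `robust_outliers` are hypothetical.

```python
import statistics


def robust_outliers(values, threshold=3.5):
    """Flag readings whose modified z-score (median/MAD based) exceeds threshold."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread at all, so nothing can be called an outlier
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical ppm readings; 480 looks like a data-entry error rather than a real trend.
readings = [12, 14, 13, 15, 12, 14, 13, 480]
print(robust_outliers(readings))  # [480]
```

Median-based statistics are used here because a single bad value barely moves the median, whereas it would inflate a mean and standard deviation and partly hide itself.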

ML Types

In general, ML can be divided into two broad approaches, both of which require large data sets split into training and test subsets:

- In supervised ML, an expert classifies the data set into different cases, for example, oil samples that indicate overheating or paper degradation. An ML tool tries to learn from parameters within the data, for example, hydrogen content, moisture level and presence of partial discharge (PD), to determine which parameters best reflect the expert classification. The resulting tool can be tested against new cases to see how effective it is.

- In unsupervised ML, a similar approach is used, but in this case, the ML tool groups the cases based on clusters in the many dimensions of the data provided. An expert then classifies the resulting clusters and tests against new cases.

As an example, consider an ML tool developed to recognize sheep and goats in pictures. In a supervised ML approach, an expert would classify each picture, and the tool would try to find differences in the data that reflect the classification. It might not be clear why the tool does what it does, so the ML could be considered a black box. Once the tool is trained, an expert would show it more pictures to classify to see how well it does. If only pictures used in the training data are shown, it would likely do very well. However, if more complex pictures or pictures of another animal are shown, the ML tool might fail.

In unsupervised ML, the tool clusters the data, and the expert classifies the clusters afterward. In both supervised and unsupervised ML, the tool performs very well when the test cases resemble the training cases but not so well when the supplied cases differ from them. What happens if there are multiple animals in a picture? Or, if there is a llama, how would it be classified? The effect called “giraffing,” where an ML tool trained to identify giraffes in supplied pictures then identifies giraffes in pictures where none is present, results from training data in which pictures with giraffes are overrepresented and pictures without giraffes are underrepresented.

For example, the effect can be seen in a visual chatbot that identifies the content of pictures but cannot tell how many giraffes are in a supplied picture.
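The supervised workflow described in this section, and its weakness with unfamiliar inputs, can be sketched with one of the simplest supervised classifiers, nearest centroid. This is an illustrative choice, not the method of any tool discussed here. The training cases, feature scaling and class names are invented and are not meant to reflect real diagnostic relationships.

```python
# Invented, expert-labeled training cases. Features are (hydrogen, moisture,
# partial discharge), each already scaled to 0..1. The numbers are made up.
TRAINING = [
    ((0.10, 0.20, 0.0), "normal"),
    ((0.15, 0.10, 0.0), "normal"),
    ((0.70, 0.30, 0.0), "overheating"),
    ((0.80, 0.25, 0.0), "overheating"),
    ((0.30, 0.80, 0.0), "paper degradation"),
    ((0.25, 0.90, 0.0), "paper degradation"),
]


def train_centroids(cases):
    """Supervised step: average the features of each expert-assigned class."""
    grouped = {}
    for features, label in cases:
        grouped.setdefault(label, []).append(features)
    return {label: tuple(sum(col) / len(rows) for col in zip(*rows))
            for label, rows in grouped.items()}


def classify(centroids, features):
    """Return the class whose centroid is nearest (squared Euclidean distance)."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


centroids = train_centroids(TRAINING)

# Held-out test cases that resemble the training data are classified well...
test = [((0.12, 0.15, 0.0), "normal"), ((0.28, 0.85, 0.0), "paper degradation")]
accuracy = sum(classify(centroids, f) == label for f, label in test) / len(test)
print(accuracy)

# ...but an unfamiliar case (the "llama") is still forced into a known class.
print(classify(centroids, (1.0, 1.0, 1.0)))
```

The last line is the point: the classifier has no "none of the above" answer, so a case unlike anything in training still receives a confident-looking label.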

EMI Spectra ML Classification

Dr. Imene Mitiche conducted an ML classification study of electromagnetic interference (EMI) spectra as part of a Doble-sponsored research and development project at Glasgow Caledonian University in the UK. Expert analysis of EMI spectra initially was used as the basis for a supervised ML approach. In the subsequent unsupervised approach, features extracted from the data, based on its entropy (a measure of orderliness), were used to cluster the data.

The improvement in results from the unsupervised approach demonstrates both the difficulty in classifying the spectra and the benefits of not assuming prior knowledge from the expert. The resulting ML system is being incorporated into Doble’s EMI survey tools to support users in the field with their analyses.
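The exact features used in the study are not described here, so the following is only a sketch of the general idea under that caveat: compute a normalized Shannon entropy for each spectrum as one plausible entropy feature, then cluster the spectra by that feature with a two-cluster k-means, leaving the naming of the clusters to an expert. The spectra and function names are hypothetical.

```python
import math


def spectral_entropy(power: list[float]) -> float:
    """Normalized Shannon entropy of a power spectrum with more than one bin.

    Returns 0 when all energy sits in one frequency bin (highly ordered)
    and 1 when energy is spread evenly across every bin (noise-like).
    """
    total = sum(power)
    probs = [p / total for p in power if p > 0]
    h = sum(-p * math.log(p) for p in probs)
    return h / math.log(len(power))


def two_means_1d(values: list[float], iterations: int = 20) -> tuple[float, float]:
    """k-means with k=2 on 1-D values; returns the (low, high) cluster centers."""
    lo, hi = min(values), max(values)  # start the centers at the extremes
    if lo == hi:
        return lo, hi
    for _ in range(iterations):
        low = [v for v in values if abs(v - lo) <= abs(v - hi)]
        high = [v for v in values if abs(v - lo) > abs(v - hi)]
        lo, hi = sum(low) / len(low), sum(high) / len(high)
    return lo, hi


# Invented 8-bin spectra: two narrow-band, two broadband.
spectra = {
    "A": [0, 1, 20, 1, 0, 0, 0, 0],
    "B": [0, 0, 1, 25, 1, 0, 0, 0],
    "C": [3, 4, 3, 4, 3, 4, 3, 4],
    "D": [4, 3, 4, 3, 4, 4, 3, 3],
}
entropies = {name: spectral_entropy(p) for name, p in spectra.items()}
lo, hi = two_means_1d(list(entropies.values()))
clusters = {name: "low-entropy" if abs(e - lo) <= abs(e - hi) else "high-entropy"
            for name, e in entropies.items()}
print(clusters)
```

No expert labels enter until after the clustering, which mirrors the unsupervised workflow: the expert would then inspect each group and decide what physical source it represents.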

Standards and guidelines are available to support many analyses, although they can be inconsistent and may not provide good interpretation in all cases. In practice, because there is so much data, feature extraction (entropy-based, for example) needs to be a focus. For example, Duke Energy has more than 10,000 large power transformers (banks > 7.5 MVA) in its fleet. These transformers have dozens of data sources, from DGA and off-line tests to maintenance history and condition monitoring, and they generate millions of individual data points. Like most utilities, Duke Energy has ever fewer people to manage the aging fleet, so it must be able to focus on what is most critical, most important and most relevant.

Practicalities At Duke Energy

Duke Energy spent numerous years researching AI/ML tools in search of a good one. By good, the utility meant a tool that classifies clear cases well and flags less clear cases for further analysis. Every ML solution Duke Energy was offered or tried for predictive maintenance shared one assumption: that, given enough data, it could make accurate predictions using Gaussian modeling of the available data.

Unfortunately, that assumption does not hold. A Gaussian, or normal, distribution is symmetrical about its expected value. In practice, the distributions of DGA values, power factor levels, PD inception voltages and other quantities are not Gaussian, and this non-Gaussian behavior carries through the analysis all the way to classification.
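A quick numerical sketch shows why symmetric Gaussian assumptions mislead on skewed data. Here a log-normal distribution stands in, purely as an assumption for illustration, for a positively skewed quantity such as a gas concentration; no real transformer data is used. A Gaussian would put about 2.3% of values beyond two standard deviations on each side of the mean.

```python
import random
import statistics

random.seed(1)
# Hypothetical positively skewed measurements drawn from a log-normal distribution.
values = [random.lognormvariate(0.0, 1.0) for _ in range(20_000)]

mu = statistics.fmean(values)
sigma = statistics.stdev(values)


def sample_skewness(xs, m, s):
    """Simple moment estimate of skewness (0 for a symmetric distribution)."""
    return sum(((x - m) / s) ** 3 for x in xs) / len(xs)


skew = sample_skewness(values, mu, sigma)
below = sum(v < mu - 2 * sigma for v in values) / len(values)
above = sum(v > mu + 2 * sigma for v in values) / len(values)

print(f"skewness={skew:.2f}, below mu-2sigma={below:.3%}, above mu+2sigma={above:.3%}")
```

The lower band falls below zero, so it captures none of the (always positive) values, while the upper tail holds noticeably more than a Gaussian predicts. A "mean plus or minus two sigma" alarm band is therefore wrong on both sides for data like this.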

The realities of transformer data are as follows:

- It can be limited and of poor quality.

- Failed-asset data has not been documented and maintained.

- There has been no investment in cleaning and verifying available data.

- Data has not been normalized across multiple sources nor within a single source.

- Even sister units have unique data characteristics arising from the manufacturing process (that is, transformers are handmade).

In addition, data scientists must contend with these realities:

- Answers are assumed to be in the available data, without necessarily referencing transformer experts.

- ML assumes a Gaussian data distribution, but most failure modes are not based on Gaussian data.

- Major companies like Dow Chemical, Audi and Intel have been open about predictive models for major plant assets not being effective.

- IT and data scientists do not usually understand failure modes and may not take them into account for their modeling.

Consequently, a lot of time, effort and resources can be spent on ML systems that do not work in the real world. Based on experience and expert input, Duke Energy has developed a hybrid model that combines the best of available analysis tools and ML systems. The model lets experts and technicians focus their effort and access the data they need, so they can make the most accurate decisions where needed, with fewer problems slipping through the cracks.

Scientific ML

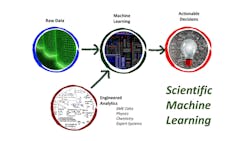

Duke developed its hybrid methodology at the same time that biologists and other scientific groups were developing similar techniques, having found that pure ML did not produce accurate results in practice. The hybrid approach is known as scientific machine learning (SciML), in which actionable decisions are made based on reliable data supported by subject matter expertise.

SciML is noted for needing less data, generalizing better, and being more interpretable and reliable than both unsupervised and supervised ML. Duke Energy’s use of SciML went into effect in January 2019, whereas the terminology and papers on the concept from academic and commercial AI/ML platforms did not come into common use until late 2019 and 2020.

The first step in developing a useful health and risk management (HRM) tool was to invest in data cleanup and subsequent data-hygiene management. This is an ongoing task that requires constant vigilance to prevent rogue data errors from causing false positives in analyses. Data is made available through a single user interface, and standard engineering algorithms are applied to identify issues and data needing deeper analysis. Condition-based maintenance (CBM), load variation, oil test, electrical test and work order data all provide context in that one interface for decision support.
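Duke Energy's actual data-hygiene rules are not described, so the following is only a minimal sketch of the kind of checks involved: drop exact duplicate records and quarantine physically implausible readings for human review instead of silently feeding them to analytics. The `OilSample` fields, the plausibility ceiling and the function `clean` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen makes records hashable, so duplicates can be detected
class OilSample:
    transformer_id: str
    sample_date: str  # ISO date, e.g. "2021-03-04"
    hydrogen_ppm: float
    moisture_ppm: float


MOISTURE_CEILING_PPM = 1000.0  # hypothetical plausibility ceiling, not a standard value


def clean(samples):
    """Drop exact duplicates and quarantine implausible readings with reasons."""
    seen, good, rejected = set(), [], []
    for s in samples:
        if s in seen:
            continue  # the same record imported twice
        seen.add(s)
        problems = []
        if s.hydrogen_ppm < 0 or s.moisture_ppm < 0:
            problems.append("negative concentration")
        if s.moisture_ppm > MOISTURE_CEILING_PPM:
            problems.append("moisture above plausibility ceiling")
        if problems:
            rejected.append((s, problems))  # kept for review, not deleted
        else:
            good.append(s)
    return good, rejected


samples = [
    OilSample("T1", "2021-01-05", 40.0, 12.0),
    OilSample("T1", "2021-01-05", 40.0, 12.0),  # duplicate
    OilSample("T2", "2021-01-06", -3.0, 15.0),  # impossible value
]
good, rejected = clean(samples)
print(len(good), rejected)
```

Quarantining rather than deleting keeps an audit trail and lets a person decide whether a flagged value is a typo or a genuine, alarming reading.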

Analytics such as the Doble Frank scores, TOA4 gassing scores and severity, and Electric Power Research Institute (EPRI) PTX indices are applied first, and the results are normalized into a linear feature set that can be analyzed with a supervised ML tool. Combining these approaches allows the data for each transformer to be classified into one of several predefined states: normal, monitor, service, stable, replace and risk identified.
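One simple reading of "normalized into a linear feature set" is min-max scaling of each analytic score to a common 0 to 1 range in a fixed order, which gives a supervised ML tool comparable inputs. The sketch below assumes that reading. The key names and especially the (min, max) ranges in `SCORE_RANGES` are placeholders, not the real scales of the Doble, TOA4 or EPRI analytics.

```python
# Placeholder (min, max) ranges; the real scales of these analytics are not given here.
SCORE_RANGES = {
    "frank_score": (0.0, 100.0),
    "toa4_severity": (0.0, 4.0),
    "ptx_index": (0.0, 10.0),
}


def to_feature_vector(scores: dict[str, float]) -> list[float]:
    """Min-max normalize each analytic score to 0..1 in a fixed feature order."""
    vector = []
    for name, (lo, hi) in SCORE_RANGES.items():
        value = min(max(scores[name], lo), hi)  # clip out-of-range values
        vector.append((value - lo) / (hi - lo))
    return vector


print(to_feature_vector({"frank_score": 25.0, "toa4_severity": 3.0, "ptx_index": 5.0}))
# [0.25, 0.75, 0.5]
```

Keeping the feature order fixed (dictionaries preserve insertion order in Python) matters: the ML tool sees only positions in the vector, not the names behind them.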

The SciML tool takes the best of both worlds: it applies standards and guidelines and benefits from the broad application of ML. At Duke Energy, the process has cut the time experts need for annual fleet evaluations from several weeks to a few days, with consistent results across the organization. The number of bad actors slipping through the cracks is lower, but not yet zero.

One feature of the hybrid system is its ability to change some states automatically:

- A state may be changed automatically to “monitor” or “service” based on raw data.

- The state may be changed to “risk identified” based on engineering analytics and ML classification.

- No transformer state can be automatically changed to “stable” or “replace,” as those states require expert intervention. After reviewing the data, the expert determines whether a transformer is stable or should be marked for replacement, with comments recorded.
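The state-change rules above can be sketched as a small guard. This is an illustration, not Duke Energy's implementation: the evidence-source names `raw_data` and `analytics_and_ml` are hypothetical, and because the text does not say whether "normal" can be set automatically, this sketch assumes it cannot.

```python
from enum import Enum


class State(Enum):
    NORMAL = "normal"
    MONITOR = "monitor"
    SERVICE = "service"
    STABLE = "stable"
    REPLACE = "replace"
    RISK_IDENTIFIED = "risk identified"


# Which evidence source may set each state automatically (hypothetical source names).
# STABLE and REPLACE are deliberately absent: only an expert may set them.
AUTOMATIC_SOURCES = {
    State.MONITOR: {"raw_data"},
    State.SERVICE: {"raw_data"},
    State.RISK_IDENTIFIED: {"analytics_and_ml"},
}


def auto_change(new_state: State, source: str) -> State:
    """Apply an automatic state change, refusing any the rules do not allow."""
    if source not in AUTOMATIC_SOURCES.get(new_state, set()):
        raise PermissionError(f"{source!r} cannot set {new_state.value!r} automatically")
    return new_state


def expert_change(new_state: State, expert: str, comment: str) -> dict:
    """Record a manual change; stable and replace can only be set this way."""
    if not comment.strip():
        raise ValueError("expert changes must include a recorded comment")
    return {"state": new_state, "expert": expert, "comment": comment}


print(auto_change(State.MONITOR, "raw_data").value)  # monitor
try:
    auto_change(State.REPLACE, "analytics_and_ml")
except PermissionError as err:
    print(err)
```

Encoding the permitted automatic transitions as data, rather than scattering checks through the code, makes the rule set easy for experts to review.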

Duke Energy’s hybrid model of engineered analytics and ML has proven to be an excellent but imperfect tool — far more accurate than either pure AI/ML tools or engineered analytics alone. The transformer state updated by experts is now far more useful in making sound planning decisions.

Success, in terms of uptake and use of the hybrid model, has been based on numerous activities: data hygiene, collation of data sources, application of standards and guidelines for engineered analytics, data normalization to produce features for the ML, and continuous expert input and refinement in a closed-loop evaluation.

The hybrid approach has enabled experts and field technicians to focus on important and critical cases. The system is not perfect, but it has identified bad actors more consistently and more accurately than any previous approach used at Duke Energy.

Experts Are The Key

AI/ML tools can provide benefits in interpreting and classifying complex data, but they can be fooled by data that is inconsistent with their training set. ML tools require input from experts who can guide tool development in specific applications.

Understanding the raw data and making the best use of data-hygiene and data-management activities provides the foundation for an overall analysis system that combines best practices, application of standards and guidelines, and targeted use of AI/ML systems. Doble Engineering has shown that developing targeted AI/ML tools can bring benefits to practical data analysis in the field and that applying targeted ML tools can support experts in their asset performance analyses.

Acknowledgments

The authors would like to thank their colleagues at Duke Energy, Doble Engineering Co. and many more across the industry who have provided comments, feedback and discussion of the application of AI techniques. Many thanks to Dr. Mitiche at Glasgow Caledonian University for sharing her results of AI analysis of PD/EMI data.

This article was provided by the InterNational Electrical Testing Association. NETA was formed in 1972 to establish uniform testing procedures for electrical equipment and systems. Today the association accredits electrical testing companies; certifies electrical testing technicians; publishes the ANSI/NETA Standards for Acceptance Testing, Maintenance Testing, Commissioning, and the Certification of Electrical Test Technicians; and provides training through its annual PowerTest Conference and library of educational resources.

This article is published in tribute to Tom Rhodes who sadly passed away recently.

Dr. Tony McGrail of Doble Engineering Company provides condition, criticality, and risk analysis for substation owner/operators. Previously, he spent over 10 years with National Grid in the UK and the US as a Substation Equipment Specialist, with a focus on power transformers, circuit breakers, and integrated condition monitoring. Tony also took on the role of Substation Asset Manager to identify risks and opportunities for investment in an ageing infrastructure. He is an IET Fellow, past-Chairman of the IET Council, a member of IEEE, ASTM, ISO, CIGRE, and IAM, and a contributor to SFRA and other standards.


About the Author

Tom Rhodes

Tom Rhodes graduated from Upper Iowa University with a BS in professional chemistry. He had more than 30 years of experience in data analysis for asset management of industrial systems. Tom worked as Implementer/Project Leader at CHAMPS Software implementing new CMMS/asset management technology, and held titles of Sr. Science and Lab Services Specialist, Scientist, and Lead Engineering Technologist at Duke Energy. He was an author and regular presenter at Doble, IEEE, Distributec, and ARC conferences on oil analysis and asset management.

Tony McGrail

Tony McGrail is solutions director for asset management and monitoring technology at Doble Engineering Co., providing condition, criticality and risk analysis for utilities. Previously, he spent over 10 years with National Grid in the UK and the U.S., as both a substation equipment specialist and an asset policy manager, identifying risks and opportunities for investment in an aging infrastructure. McGrail is chair of the IEEE working group on asset management, a member of IET, CIGRE and IAM, as well as a contributor to SFRA and other standards at IEEE, IEC and CIGRE. His initial degree was in physics, supplemented by a master’s degree and a doctorate in electrical engineering as well as an MBA degree. McGrail is an adjunct professor at Worcester Polytechnic Institute, Massachusetts, leading courses in power systems analysis.